UPDATE: please check my more recent post about this problem and the information I’ve got at the Oracle Demo Grounds during OOW14: http://www.ludovicocaldara.net/dba/demo-grounds-clone-pdb-rw/

I feel the strong need to blog abut this very recent problem because I’ve spent a lot of time debugging it… especially because there’s no information about this error on the MOS.

Introduction

For a lab, I have prepared two RAC Container databases in physical stand-by.

Real-time query is configured (real-time apply, standby in read-only mode).

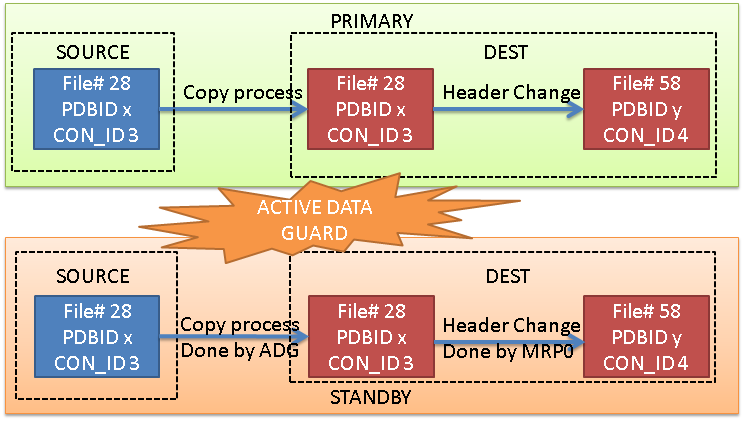

Following the doc, http://docs.oracle.com/database/121/SQLRF/statements_6010.htm#CCHDFDDG, I’ve cloned one local pluggable database to a new PDB and, because Active Data Guard is active, I was expecting the PDB to be created on the standby and its files copied without problems.

BUT! I’ve forgot to put my source PDB in read-only mode on the primary and, strangely:

- The pluggable database has been created on the primary WITHOUT PROBLEMS (despite the documentation explicitly states that it needs to be read-only)

- The recovery process on the standby stopped with error.

Recovery copied files for tablespace SYSTEM Recovery successfully copied file +DATA/CDBGVA/01B838F74693443FE053334EA8C03527/DATAFILE/system.437.856805523 from +DATA/CDBGVA/01B431F9BDF51AB7E053334EA8C06877/DATAFILE/system.435.856802413 MRP0: Background Media Recovery terminated with error 1274 Thu Aug 28 17:32:05 2014 Errors in file /u01/app/oracle/diag/rdbms/cdbgva/CDBGVA_1/trace/CDBGVA_1_mrp0_13949.trc: ORA-01274: cannot add data file that was originally created as '+DATA/CDBATL/01B838F74693443FE053334EA8C03527/DATAFILE/system.477.856805517' ORA-19729: File 22 is not the initial version of the plugged in datafile Thu Aug 28 17:32:05 2014

Now, the primary had all its datafiles (the new PDB has con_id 4):

CON_ID NAME ---------- ---------------------------------------------------------------------------------------------------- 1 +DATA/CDB/DATAFILE/system.283.854626623 1 +DATA/CDB/DATAFILE/undotbs1.290.854627639 1 +DATA/CDB/DATAFILE/users.291.854627695 1 +DATA/CDB/DATAFILE/undotbs2.287.854627063 1 +DATA/CDB/DATAFILE/sysaux.285.854626879 2 +DATA/CDB/FFBCECBB503D606BE043334EA8C019B7/DATAFILE/sysaux.286.854627011 2 +DATA/CDB/FFBCECBB503D606BE043334EA8C019B7/DATAFILE/system.284.854626785 3 +DATA/CDBATL/00B29F47A2D71CC2E053334EA8C03B13/DATAFILE/sysaux.390.855681795 3 +DATA/CDBATL/00B29F47A2D71CC2E053334EA8C03B13/DATAFILE/system.389.855681795 4 +DATA/CDBATL/01B431F9BDF51AB7E053334EA8C06877/DATAFILE/sysaux.459.856788061 4 +DATA/CDBATL/01B431F9BDF51AB7E053334EA8C06877/DATAFILE/system.458.856788061

and the standby was missing the datafiles of the new PDB:

1* select con_id, name from v$datafile order by 1 CON_ID NAME ---------- ---------------------------------------------------------------------------------------------------- 1 +DATA/CDBGVA/DATAFILE/system.319.855054997 1 +DATA/CDBGVA/DATAFILE/undotbs2.283.855055141 1 +DATA/CDBGVA/DATAFILE/users.285.855055149 1 +DATA/CDBGVA/DATAFILE/undotbs1.284.855055145 1 +DATA/CDBGVA/DATAFILE/sysaux.281.855055061 2 +DATA/CDBGVA/FFBCECBB503D606BE043334EA8C019B7/DATAFILE/sysaux.282.855055127 2 +DATA/CDBGVA/FFBCECBB503D606BE043334EA8C019B7/DATAFILE/system.280.855055053 3 +DATA/CDBGVA/00B29F47A2D71CC2E053334EA8C03B13/DATAFILE/sysaux.363.855681865 3 +DATA/CDBGVA/00B29F47A2D71CC2E053334EA8C03B13/DATAFILE/system.362.855681863

But, on the standby database, the PDB somehow was existing.

16:20:58 SYS@CDBGVA_1> select name from v$pdbs; NAME ------------------------------ PDB$SEED MAAZ LUDO

I’ve tried to play a little, and finally decided to disable the recovery for the PDB (new in 12.1.0.2).

But to disable the recovery I was needing to connect to the PDB, but the PDB was somehow “inexistent”:

16:21:35 SYS@CDBGVA_1> alter session set container=LUDO; ERROR: ORA-65011: Pluggable database LUDO does not exist. 16:21:39 SYS@CDBGVA_1> select name, open_mode from v$pdbs; NAME OPEN_MODE ------------------------------ ---------- PDB$SEED READ ONLY MAAZ MOUNTED LUDO MOUNTED

So I’ve tried to drop it, but off course, the standby was read-only and I could not drop the PDB:

16:22:01 SYS@CDBGVA_1> drop pluggable database ludo; drop pluggable database ludo * ERROR at line 1: ORA-16000: database or pluggable database open for read-only access

Then I’ve shutted down the standby, but one instance hung and I’ve needed to do a shutdown abort (I don’t know if it was related with my original problem..)

# [ oracle@racb02:/u01/app/oracle/diag/rdbms/cdbgva/CDBGVA_1/trace [16:22:45] [12.1.0.2.0 EE SID=CDBGVA_1] 1 ] # # srvctl stop database -d CDBGVA -o immediate [HANGS]

After mounting again the standby, the PDB was also accessible:

SQL*Plus: Release 12.1.0.2.0 Production on Thu Aug 28 16:30:19 2014 Copyright (c) 1982, 2014, Oracle. All rights reserved. Connected to: Oracle Database 12c Enterprise Edition Release 12.1.0.2.0 - 64bit Production With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP, Advanced Analytics and Real Application Testing options 16:30:19 SYS@CDBGVA_1> alter session set container=LUDO; Session altered.

So I’ve been able to disable the recovery:

16:31:19 SYS@CDBGVA_1> alter pluggable database ludo disable recovery; Pluggable database altered.

Then, on the primary, I’ve took a fresh backup of the involved datafiles:

RMAN> backup as copy datafile 16,17 format '/tmp/%f.dbf'; Starting backup at 28-AUG-14 using target database control file instead of recovery catalog allocated channel: ORA_DISK_1 channel ORA_DISK_1: SID=88 instance=CDBATL_2 device type=DISK channel ORA_DISK_1: starting datafile copy input datafile file number=00017 name=+DATA/CDBATL/01B431F9BDF51AB7E053334EA8C06877/DATAFILE/sysaux.459.856788061 output file name=/tmp/17.dbf tag=TAG20140828T163251 RECID=4 STAMP=856801976 channel ORA_DISK_1: datafile copy complete, elapsed time: 00:00:07 channel ORA_DISK_1: starting datafile copy input datafile file number=00016 name=+DATA/CDBATL/01B431F9BDF51AB7E053334EA8C06877/DATAFILE/system.458.856788061 output file name=/tmp/16.dbf tag=TAG20140828T163251 RECID=5 STAMP=856801981 channel ORA_DISK_1: datafile copy complete, elapsed time: 00:00:03 Finished backup at 28-AUG-14 Starting Control File and SPFILE Autobackup at 28-AUG-14 piece handle=+DATA/CDBATL/AUTOBACKUP/2014_08_28/s_856801982.471.856801983 comment=NONE Finished Control File and SPFILE Autobackup at 28-AUG-14

and I’ve copied and cataloged the copies to the controlfile:

RMAN> catalog start with '/tmp/1'; searching for all files that match the pattern /tmp/1 List of Files Unknown to the Database ===================================== File Name: /tmp/17.dbf File Name: /tmp/16.dbf Do you really want to catalog the above files (enter YES or NO)? yes cataloging files... cataloging done List of Cataloged Files ======================= File Name: /tmp/17.dbf File Name: /tmp/16.dbf

but the restore was impossible, because the controlfile was not knowing these datafiles!!

16:38:48 SYS@CDBGVA_1> select file# from v$datafile;

FILE#

----------

1

2

3

4

5

6

7

10

11

RMAN> run {

2> set newname for datafile 16 to new;

3> set newname for datafile 17 to new;

4> restore datafile 16,17;

5> }

executing command: SET NEWNAME

executing command: SET NEWNAME

Starting restore at 28-AUG-14

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=64 instance=CDBGVA_1 device type=DISK

RMAN-00571: ===========================================================

RMAN-00569: =============== ERROR MESSAGE STACK FOLLOWS ===============

RMAN-00571: ===========================================================

RMAN-03002: failure of restore command at 08/28/2014 16:37:02

RMAN-20201: datafile not found in the recovery catalog

RMAN-06010: error while looking up datafile: 17

RMAN> exit

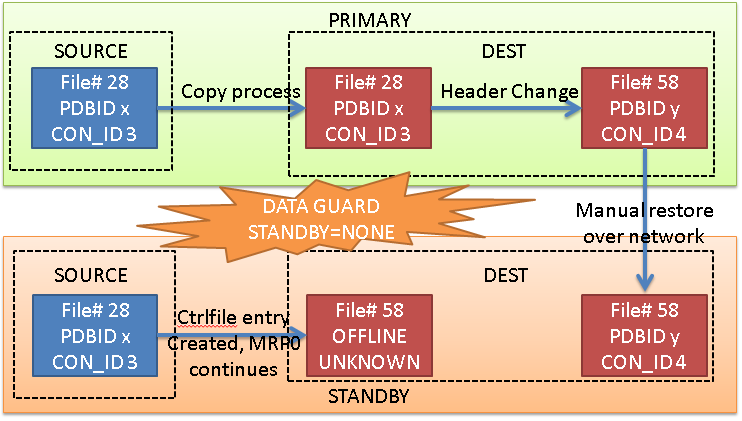

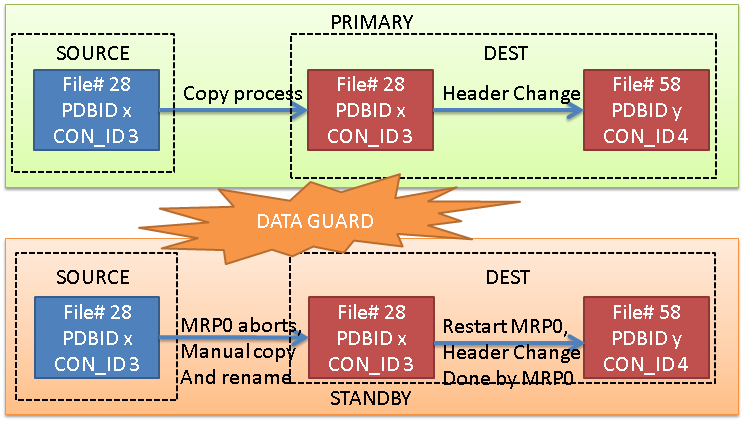

So I’ve RESTARTED the recovery for a few seconds, and because the PDB had the recovery disabled, the recovery process has added the datafiles and set them offline.

16:38:08 SYS@CDBGVA_1> alter database recover managed standby database ; alter database recover managed standby database * ERROR at line 1: ORA-16043: Redo apply has been canceled. ORA-01013: user requested cancel of current operation 16:38:48 SYS@CDBGVA_1> select file# from v$datafile; FILE# ---------- 1 2 3 4 5 6 7 10 11 16 17

Then I’ve been able to restore the datafiles

RMAN> run {

2> set newname for datafile 16 to new;

3> set newname for datafile 17 to new;

4> restore datafile 16,17;

5> }

executing command: SET NEWNAME

executing command: SET NEWNAME

Starting restore at 28-AUG-14

using target database control file instead of recovery catalog

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=33 instance=CDBGVA_1 device type=DISK

channel ORA_DISK_1: restoring datafile 00016

input datafile copy RECID=21 STAMP=856802136 file name=/tmp/16.dbf

destination for restore of datafile 00016: +DATA

channel ORA_DISK_1: copied datafile copy of datafile 00016

output file name=+DATA/CDBGVA/01B431F9BDF51AB7E053334EA8C06877/DATAFILE/system.435.856802413 RECID=22 STAMP=856802416

channel ORA_DISK_1: restoring datafile 00017

input datafile copy RECID=20 STAMP=856802136 file name=/tmp/17.dbf

destination for restore of datafile 00017: +DATA

channel ORA_DISK_1: copied datafile copy of datafile 00017

output file name=+DATA/CDBGVA/01B431F9BDF51AB7E053334EA8C06877/DATAFILE/sysaux.355.856802417 RECID=23 STAMP=856802421

Finished restore at 28-AUG-14

RMAN>

RMAN> switch datafile 16, 17 to copy;

datafile 16 switched to datafile copy "+DATA/CDBGVA/01B431F9BDF51AB7E053334EA8C06877/DATAFILE/system.435.856802413"

datafile 17 switched to datafile copy "+DATA/CDBGVA/01B431F9BDF51AB7E053334EA8C06877/DATAFILE/sysaux.355.856802417"

RMAN>

Finally, I’ve enabled again the recovery for the PDB and restarted the apply process.

16:41:14 SYS@CDBGVA_1> alter session set container=LUDO; Session altered. 16:41:19 SYS@CDBGVA_1> alter pluggable database ludo enable recovery; Pluggable database altered.

Lesson learned: if you want to clone a PDB never, ever, forget to put your source PDB in read-only mode or you’ll have to deal with it!!

We could not miss this RAC Attack tradition!

We could not miss this RAC Attack tradition!

).

).